People often ask me how likely brain preservation is to work. It’s a good question, and it seems potentially important for anyone who doesn’t want to die, or doesn’t want other people to have to die. I don’t feel like I have a good answer, which is one reason I want to do more research. But I think my answer has recently gotten a little better, and I want to explain why.

A superficially similar question, “Does brain preservation work?”, is also often asked, and I can see why people ask it, but it is a question that can only be answered in the future. Brain preservation is not suspended animation. It is an attempt to preserve the molecular structure of the brain, the physical substrate that encodes our memories and identity, in the hope that future technology might be able to extract and use that information. So people who say “brain preservation [or cryonics] doesn’t work” are either confused or making a very strong claim about neuroscience (that molecular structure doesn’t encode identity and memories) and/or about future technology (that we can know with certainty what future technology won’t be able to do), which as far as I know is not justified.

The more incisive question is not “Does it work?” but rather “What is the probability that it will work, for any given person?” This, in turn, can be broken down into several more specific probabilities. Here is one way to do so:

1: The probability that the preservation procedure in the ideal case retains sufficient information for survival, including the structures encoding long-term memories and personality.

2: The probability that the ways in which any particular case differs from the ideal are not so damaging as to destroy the information (e.g., not too much time elapsing between legal death and the start of the preservation procedure, and the initial procedure being performed to a sufficiently high standard).

3: The probability that the preserved brain remains preserved long-term (e.g., sufficient organizational robustness, legal protections, and ongoing maintenance).

4: The probability that civilization survives long enough (i.e., no extinction events, and technological civilization being maintained to a sufficient degree). Opinions on this differ wildly.

5: The probability that civilization develops revival technology (i.e., sufficient advancement over time in, e.g., molecular nanotechnology or whole brain emulation, or, if one is focusing on a single revival strategy, in that specific technology pathway).

6: The probability that revival technology is used to revive a particular preserved person (e.g., that revival is permitted, and that preservation organizations or others have the will and resources to perform it).

At first glance, one might be tempted to estimate these as independent probabilities and then multiply them together. However, these factors are actually highly correlated, particularly through probability #1 — whether the preservation procedure can retain sufficient information in the best case scenario.
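A minimal sketch of why this correlation matters, using purely hypothetical numbers that do not come from the survey or from my own estimates. Assume #1 holds with probability 0.4, and each of #2 through #6 holds with probability 0.8 if #1 is true but only 0.3 if it is false. Assume also, as a simplification, that #2 through #6 are independent of one another once we know whether #1 holds. Survival requires all six, so the correct joint probability is P(#1) times P(#2..#6 | #1), which differs sharply from multiplying the six unconditional probabilities.

```python
# All numbers are hypothetical and chosen only for illustration.
P1 = 0.4           # P(#1): ideal-case preservation retains the critical information
P_IF_TRUE = 0.8    # P(#k | #1 true) for each of #2..#6 (assumed equal for simplicity)
P_IF_FALSE = 0.3   # P(#k | #1 false) for each of #2..#6
N_DOWNSTREAM = 5   # factors #2 through #6


def marginal(p1: float, p_if_true: float, p_if_false: float) -> float:
    """Unconditional probability of one downstream factor (law of total probability)."""
    return p1 * p_if_true + (1 - p1) * p_if_false


def naive_product(p1: float, p_if_true: float, p_if_false: float, n: int) -> float:
    """Treat all six factors as independent and multiply their unconditional probabilities."""
    return p1 * marginal(p1, p_if_true, p_if_false) ** n


def chain_rule(p1: float, p_if_true: float, n: int) -> float:
    """P(all six) = P(#1) * P(#2..#6 | #1), assuming #2..#6 are
    conditionally independent of each other given #1."""
    return p1 * p_if_true ** n


naive = naive_product(P1, P_IF_TRUE, P_IF_FALSE, N_DOWNSTREAM)
joint = chain_rule(P1, P_IF_TRUE, N_DOWNSTREAM)
print(f"naive independent product: {naive:.4f}")
print(f"correlated (chain rule):   {joint:.4f}")
```

With these made-up inputs, the naive product comes out at about 0.0125, while the chain-rule figure is about 0.131, roughly ten times larger. The point is only qualitative: when the downstream factors move together with #1, estimating each one in isolation and multiplying can badly misstate the overall probability.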

Consider that while brain preservation is still a tiny field, it has been growing at around 8-9% per year. If probability #1 is truly high (that is, if one or more contemporary preservation procedures really do maintain the critical information), then this growth is likely to continue or accelerate as evidence accumulates. Greater scale would likely bring more professionalization and better preservation quality (#2), stronger organizations (#3), greater societal investment in developing revival technologies (#5), and a higher likelihood of social acceptance and resources being available for revival (#6).
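For a sense of scale: a constant annual growth rate $r$ implies a doubling time of $\ln 2 / \ln(1 + r)$. Taking the 8-9% figure at face value, and assuming (as a simplification) that the rate stays constant:

$$
T_{\text{double}} = \frac{\ln 2}{\ln(1 + r)}, \qquad
r = 0.08 \Rightarrow T_{\text{double}} \approx \frac{0.693}{0.0770} \approx 9.0 \text{ years}, \qquad
r = 0.09 \Rightarrow T_{\text{double}} \approx \frac{0.693}{0.0862} \approx 8.0 \text{ years}.
$$

So if the trend held, the field would roughly double in size every eight to nine years. This is an extrapolation of the stated rate, not a forecast.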

Conversely, if probability #1 is truly low (i.e., if fundamental information is lost even in the best cases), this would likely become apparent over time through neuroscience research. Detailed technical critiques of brain preservation, of which there are currently none, would then likely appear. This would probably cause the field to contract, organizations to weaken, and technology development to slow or stop. In my reading of the history of science, the truth about questions of this kind has tended to win out eventually.

This means that while these probabilities are technically distinct events, they’re highly dependent on whether the basic premise of brain preservation is sound. This makes probability #1 particularly crucial to investigate. And historically, this has been one of the most hand-wavy aspects of the field. While researchers such as Mike Darwin or Ken Hayworth have provided their own analyses, it is unclear how much the public can or should trust any one individual’s opinion.

This year, Ariel Zeleznikow-Johnston, Emil Kendziorra, and I teamed up to do a survey on memory and brain preservation that — inter alia — tried to address this by asking neuroscience experts what they think about this question. We had 312 respondents. Here is our preprint, and here is a link to the questions and survey results. Although the survey was a team effort, the conclusions in this essay are my own, and any errors or overly strong claims are my fault alone.

We provided the respondents with published findings about the best-validated structural brain preservation technique known to date, aldehyde-stabilized cryopreservation (ASC). We then asked them: “What is your subjective probability estimate that a brain successfully preserved with the aldehyde-stabilized cryopreservation method would retain sufficient information to theoretically decode at least some long-term memories?” Here is the distribution of probability estimates:

The median estimate was 41%. Interestingly, the distribution appears bimodal, with one peak at around 10% and another at around 75%.

Why not 100%? One way to assess this is to look at the other answers that survey respondents gave. It turns out that the answers to this question (“ASC probability”) are highly correlated with the answers to two other dispositive questions: (a) how likely it is that a whole brain emulation could be created from a preserved brain without prior electrophysiological recordings from that animal (“Emulation w/o in vivo”) and (b) whether it is theoretically possible that long-term memory-related information could be extracted from a static snapshot of the brain (“Theoretical Possibility”):

You can evaluate the survey data and come to your own conclusions, but to me it seems that respondents’ main critique of aldehyde-stabilized cryopreservation is that preserving static structural information alone is insufficient. In other words, they are claiming that we would also need to capture “dynamic” measurements of each brain in action, such as electrophysiological recordings from each person’s brain, rather than just performing enough such measurements in “side experiments” to work out how the brain’s structure-function relationships operate in general. This echoes a 2019 Twitter debate about brain preservation among neuroscientists.

Personally, I think this critique is very unlikely to be correct. Long-term memories and personality traits must be encoded in stable physical patterns in the brain. If they weren’t, they wouldn’t survive cardiac arrest, during which the brain’s electrical activity stops. They also probably wouldn’t remain stable over periods of years. Unless there is something special about the brain, it seems that function must follow from structure.

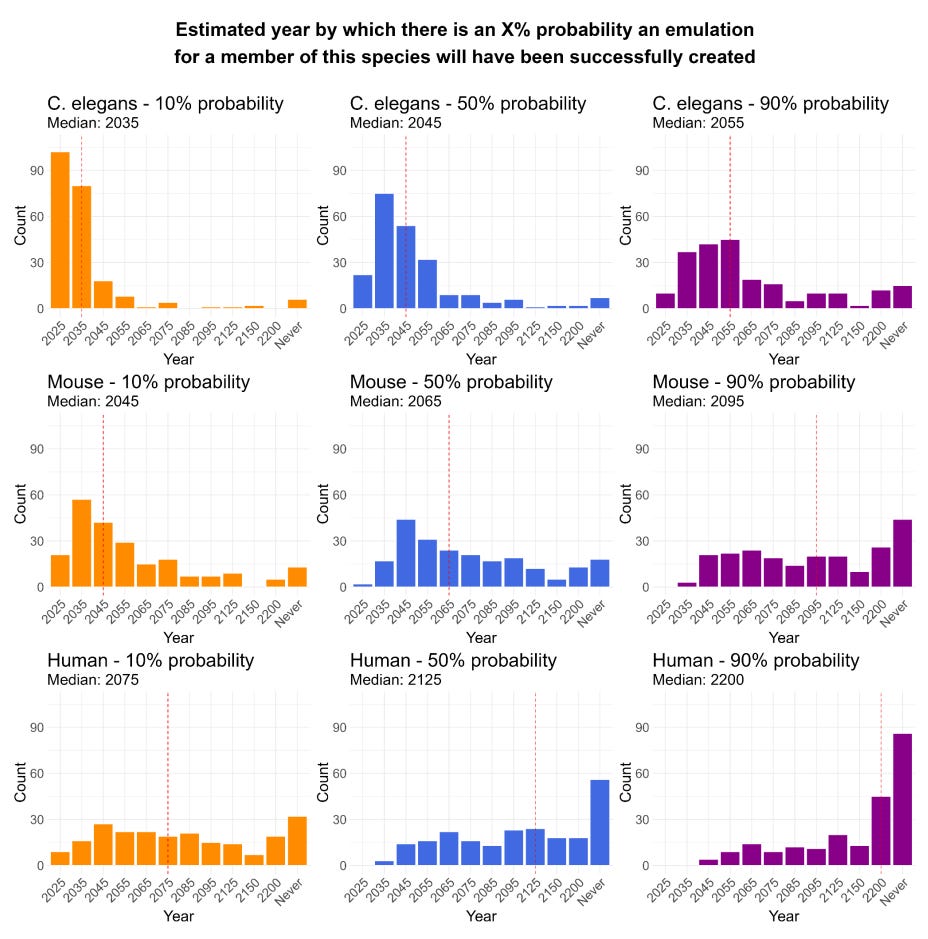

My personal guess is that, over the coming years and decades, there will be a growing number of studies in which scientists simulate, and eventually decode, neural information from static brain measurements. You can see some preliminary work in this area on the Aspirational Neuroscience website. Over time, I predict that the “dynamic information must be captured” view will be shown not to hold for more and more neural circuits, ultimately building up to whole brain emulations¹, which most of the surveyed experts thought would eventually happen:

This could mirror a pattern we’ve seen in machine learning. Some initially argued that deep learning models would never be able to “understand” concepts because they were fundamentally just static patterns of weights stored in computer memory and used during model execution. Yet these theoretical objections were eventually superseded by empirical findings that large language models (LLMs) can perform sophisticated reasoning and knowledge manipulation. As a result, this argument is made much less often today.

Taken together, while these expert estimates are far from conclusive, they suggest that reasonable estimates for probability #1 (whether preservation can retain the critical information in the best case) are far from negligible. My own subjective estimate is higher than the median, though definitely not 100%, as I can see potential failure modes at the molecular scale, such as alterations to biomolecular conformation or to precise synaptic configurations during preservation. However, the redundant nature of neural information storage makes me think it is very unlikely that changes of the magnitude expected during preservation would be catastrophic.

That said, I think it might make sense for others to rely on the median estimate of 41% when making their own predictions, rather than on mine. I would, however, advocate for this survey or something like it to be repeated in the future, so that we can see whether the neuroscience community’s opinions have shifted, because I do expect them to shift toward mine as more evidence accumulates.

My personal view is that the biggest problem in brain preservation is not ideal-case preservation quality or the long-term maintenance of preserved brains (which has become more robust since the tragic early failures in cryonics, and is made more secure by aldehyde fixation), but rather practical implementation for any given person (probability #2). Whether it is someone dying alone and not being found for days, a medical examiner sectioning the brain into pieces, or unavoidable delays preventing adequate perfusion, there are many ways the preservation procedure can be compromised.

This is not even getting into the fact that some people who want preservation can’t access it at all: because they can’t afford it, because it isn’t available in their area, because their family interferes, or for many other reasons.

Of course, I could be wrong about this for one reason or another. I try hard to be willing to change my mind, and I’m always open to critiques. But if I’m right, we may eventually look back and see it as a waste not to have done the work needed to offer high-quality structural brain preservation to more people.

Disclosure: I work at Oregon Brain Preservation, a non-profit organization which offers brain preservation services. This survey was supported by a grant from CryoDAO.

¹ This essay might make it seem as if I think whole brain emulation, which may emerge naturally from memory-decoding experiments as they scale up to larger and more complex neural circuits, is the only revival method that will be possible. That is not my view. Revival via molecular nanotechnology might take longer, but arguing that it will never be possible requires arguing that molecular nanotechnology will never be developed, even hundreds of years from now, when emulated humans could help vastly accelerate technological progress. I don’t think that is likely.

I'm surprised by the number of neuroscientists who don't think structural preservation is enough. That seems to contradict much of what we know.